Machine learning (ML) technologies have proven their value in real-world manufacturing applications. However, many engineers and plant managers still think of ML as a nebulous, mysterious force floating around in the cloud somewhere that only data scientists can access. ML is neither nebulous nor inaccessible, and it can provide a real automation advantage. While there are many approaches to ML projects, and more continue to emerge, the best way to implement ML in an industrial context is to incorporate it directly into the controls environment. Consider these seven tips to get started.

1. Choose the right project for machine learning

Engineers and plant managers should always assess which types of applications are a good fit for ML and how they can use it effectively. Just because ML is new to many, exciting and becoming more accessible does not mean it is a panacea for every unsolved engineering challenge. For the right applications, however, ML implementations within the machine controller can offer substantial innovation and a competitive lead.

Applications for ML in machine control are often problems that are challenging to solve using traditional programming algorithms. Once a possible application is identified, users should implement it as a prototype quickly and in an agile environment. The agility of the project team is a decisive factor. ML projects are, by nature, evolutionary and iterative processes. They do not fit into a tight, set framework. The general project flow moves through data collection, data preparation, model training and testing, then repeats with more data collection and model refinement until the model produces reliable predictions.


2. Select an integrated software solution for ML

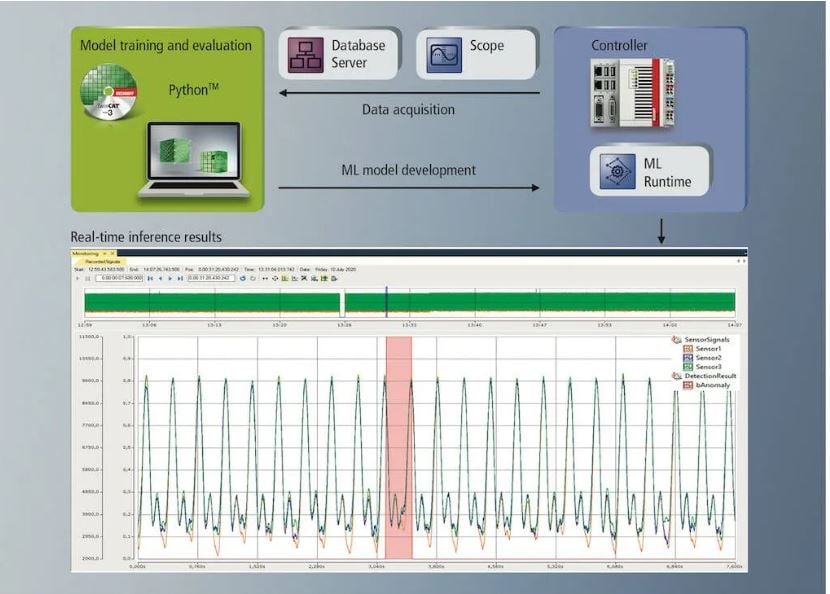

Since the goal is to run ML in a real-time controls environment, the more integrated a solution is, the better. This allows fast machine reaction times based on ML model predictions. Some vendors offer inference engines for ML models that are incorporated into the standard automation software, with the execution of models such as neural networks directly in the real-time environment.

An integrated approach supports nearly unlimited fields of machine application, such as ML-based solutions for quality control and process monitoring/optimization applications. For example, fully automated and system-integrated quality control applications based on existing machine data, such as motor currents, speeds and tracking errors, enable the machine controller to give accurate product quality predictions on 100% of goods produced. This works 24/7 and at a rapid cycle time.

Continuous process monitoring and process optimization are sequential steps: If a process can be monitored with a trained model, the machine can send a message to the machine operator to adjust the process to maintain quality. The next step is to learn from the experienced machine operator and train the model to offer parameterization suggestions or simply make the necessary parameter adjustments autonomously.

3. Understand the control hardware requirement

When most people think of ML, they assume it requires very large computer hardware and many GPU cores. Training ML models on massive data sets might indeed require this much computing power. However, running the resulting trained models, that is, inference, requires less-powerful hardware. In optimized solutions, the inference engine runs directly on powerful industrial PCs (IPCs), with access to the high computing capacity of modern CPU architectures. This approach makes the execution of trained models more efficient by using specialized CPU instruction set extensions in combination with optimized CPU cache management. Also, the trend toward more processor cores per CPU supports accelerated execution of neural networks.

A close look at the trained model is always necessary. Just like “handwritten” source code, a model can be large and inefficient, which leads to longer execution times than a lean, optimized one. ML models should always be adapted and optimized to the task. With the right hardware and a well-optimized model, it’s possible to execute neural networks in the microseconds range with a standard PC-based machine controller.
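To make the size argument concrete, the following minimal sketch counts the multiply-accumulate (MAC) operations in one forward pass of a fully connected network. The two layer layouts are hypothetical examples, not models from any real project, and the MAC count is only a rough proxy: actual execution time also depends on the hardware, cache behavior and the inference engine.

```python
def mac_count(layer_sizes):
    """Multiply-accumulates for one forward pass of a dense network.

    layer_sizes lists the neurons per layer, input first, output last.
    Each pair of adjacent layers contributes inputs * outputs MACs.
    """
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical layouts: 16 machine signals in, 2 classes out.
oversized_model = [16, 512, 512, 2]
lean_model = [16, 32, 16, 2]

oversized_macs = mac_count(oversized_model)
lean_macs = mac_count(lean_model)

print("oversized:", oversized_macs)  # 271360
print("lean:", lean_macs)            # 1056
```

If both models reach the required prediction quality, the lean one needs far less arithmetic per cycle, which is the kind of check worth doing before deploying a model to the controller.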

A large Chinese food company uses TwinCAT Machine Learning to achieve the highest possible quality level in the packaging of instant noodles. This image shows the standard ML workflow and an anomaly detected and displayed in TwinCAT Scope View, which the system integrator used to implement the solution.

4. Start planning early, but keep exploring options

An ML project is an evolutionary process. Engineers should start as early as possible in the value chain, at the machine builder. Refined model predictions take significant training data, and more data often means more accurate models. Therefore, not every new machine design will have an optimal solution when it is first put into operation at an end customer.

However, during the machine lifecycle, new relevant data can be collected and evaluated. This helps ML models constantly improve. To support this process, some vendors set up the inference engine so it can load new models on the fly without stopping the machine, restarting the PLC or recompiling source code.
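The idea of loading a new model without stopping the machine can be sketched as a simple reference swap. This is a generic illustration of the pattern in Python, not how any particular vendor's inference engine is implemented; the class `InferenceEngine`, the two model functions and the signal names are all hypothetical.

```python
import threading


class InferenceEngine:
    """Holds the active model and lets it be replaced while predictions continue."""

    def __init__(self, model):
        self._model = model
        self._lock = threading.Lock()

    def load_model(self, new_model):
        # Swap the reference under a lock; no restart or recompilation involved.
        with self._lock:
            self._model = new_model

    def predict(self, features):
        # Take a snapshot of the current model, then run it outside the lock
        # so a slow prediction never blocks a model update.
        with self._lock:
            model = self._model
        return model(features)


def model_v1(features):
    # Hypothetical first model: rejects a part when motor current exceeds 3.0 A.
    return "reject" if features["motor_current"] > 3.0 else "ok"


def model_v2(features):
    # Hypothetical refined model trained on more data: also checks tracking error.
    if features["motor_current"] > 3.0 or features["tracking_error"] > 0.5:
        return "reject"
    return "ok"


engine = InferenceEngine(model_v1)
sample = {"motor_current": 2.1, "tracking_error": 0.8}

before = engine.predict(sample)   # evaluated by model_v1
engine.load_model(model_v2)       # update while the engine stays in service
after = engine.predict(sample)    # evaluated by model_v2

print(before, after)  # ok reject
```

The calling code never changes: the machine cycle keeps calling `predict()`, and only the model behind it is exchanged.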

Many applications involve an existing machine with a controller that does not include ML functionality. End users want such machines to optimize their production and are now considering ML. In these cases, an open control concept plays a decisive role. Choose a solution that readily connects to third-party controls through various interfaces. In many cases, this allows ML implementation on an older machine by installing an IPC that has read access to the essential data from the existing controller and hosts the inference for, say, inline product quality predictions.

Beckhoff introduced TwinCAT ML at Hannover Messe 2019, and since then, the number of industrial applications using trained neural networks has continued to grow exponentially.

5. ML projects require collaboration among data and domain engineering experts

Since machine knowledge and data expertise are critical in ML applications, the project team should include several different experts. The main player is the domain expert, for example, a mechanical engineer or an expert in linear actuators or the forming process. The domain expert wants to solve a specific ML challenge, so they have a goal in mind and know the interrelationships in their machines. Next is the data scientist, who is responsible for data analysis. Working together, these two must define the essential machine variables that are important for the defined goal. The data scientist works closely with the domain expert to shed light on the meaning of certain data patterns and behaviors.

A data scientist alone, without feedback from the domain expert, lacks the know-how to make these projects effective. Some machine builders already have data science departments, or at least an individual data scientist, and take on this task themselves. Others need more intensive support, which is available from some vendors or specialized automation integrators. Factor the level of support needed into the choice of solution.

6. Protect IP while gathering ML training data

A trained ML model can have a huge impact on a company’s competitiveness. It also represents significant engineering effort, collaboration between experts from different domains and a large amount of collected data. Therefore, when working on ML projects, always give data and IP protection the attention that this value warrants. After the ML models are trained, they are deployed to production or end-user facilities. The trained model can, and in most applications should, be protected from being copied and used in an unauthorized manner. The software protection mechanisms in some platforms can protect not only ML models, but also PLC source code and deployed code.


7. Understand how to train ML models to detect anomalies

It can be hard to train for anomalies when engineers don’t know what they are. Many paths can lead to the destination. A simple, easy-to-describe example is to train a classification model with only one known class, the “no anomaly” class. Present the model in training with only data that contains no anomalies, and define this set of data as “class A.” When the algorithm is used in the process, it recognizes data that belongs to “class A.” However, it also recognizes when the data have some other unknown structure, and then reports an unspecified anomaly.
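The one-class idea can be sketched in a few lines. The example below is a deliberately simple stand-in for a trained classification model: it learns the mean and standard deviation of each signal from anomaly-free data and reports an anomaly when any signal deviates too far. The class name, the signals (motor current and speed), the synthetic training data and the threshold of 4.0 are all assumptions made for illustration.

```python
import random
import statistics


class OneClassDetector:
    """Learns what "class A" (no anomaly) looks like from anomaly-free samples."""

    def __init__(self, threshold=4.0):
        # Largest deviation, in standard deviations, still accepted as class A.
        # The value is a hypothetical tuning parameter.
        self.threshold = threshold
        self.means = []
        self.stdevs = []

    def fit(self, normal_samples):
        # Compute per-signal statistics over the anomaly-free training data.
        columns = list(zip(*normal_samples))
        self.means = [statistics.fmean(col) for col in columns]
        self.stdevs = [statistics.stdev(col) or 1e-9 for col in columns]
        return self

    def score(self, sample):
        # Largest absolute z-score across all signals in the sample.
        return max(
            abs(x - m) / s for x, m, s in zip(sample, self.means, self.stdevs)
        )

    def classify(self, sample):
        return "class A" if self.score(sample) <= self.threshold else "anomaly"


# Synthetic stand-in for anomaly-free machine data:
# (motor current in A, speed in rpm).
rng = random.Random(0)
training = [(rng.gauss(2.0, 0.1), rng.gauss(1500.0, 10.0)) for _ in range(500)]

detector = OneClassDetector(threshold=4.0).fit(training)

print(detector.classify((2.05, 1495.0)))  # close to the training data
print(detector.classify((3.5, 1500.0)))   # motor current far outside class A
```

The detector never sees an example of a fault. It only knows what normal looks like, so anything sufficiently different is reported as an unspecified anomaly, which matches the approach described above.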

It bears repeating: ML is an evolutionary process. If data are continuously collected from the machine and the classification result is also saved, then the data scientist and the domain expert can analyze in more detail those process sequences in which an anomaly was detected.
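As a minimal sketch of this record-keeping, the example below stores each machine cycle together with its classification result and then retrieves only the cycles flagged as anomalies for closer analysis. The field names and values are hypothetical, and an in-memory CSV buffer stands in for whatever database or historian a real installation would use.

```python
import csv
import io

# Hypothetical record layout: one row per machine cycle.
FIELDS = ["cycle", "motor_current", "speed", "label"]


def log_cycle(writer, cycle, motor_current, speed, label):
    # Save the raw process data together with the classification result.
    writer.writerow(
        {"cycle": cycle, "motor_current": motor_current, "speed": speed, "label": label}
    )


def anomalous_cycles(csv_text):
    # Return the cycle numbers whose classification result was "anomaly".
    reader = csv.DictReader(io.StringIO(csv_text))
    return [int(row["cycle"]) for row in reader if row["label"] == "anomaly"]


buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=FIELDS)
writer.writeheader()

log_cycle(writer, 1, 2.01, 1498.0, "class A")
log_cycle(writer, 2, 3.40, 1502.0, "anomaly")
log_cycle(writer, 3, 1.99, 1501.0, "class A")

flagged = anomalous_cycles(buffer.getvalue())
print(flagged)  # [2]
```

With the flagged cycles identified, the data scientist and the domain expert can pull the matching process data and decide whether it belongs in the next round of training.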

If necessary, an updated model can then be used for anomaly detection and to narrow down the detected cases in more detail. As users gain experience with and understanding of the tools, they will see how many opportunities and approaches there are to automate machine performance and quality optimizations with machine learning.
